Enhancing decision-making with a RAG chatbot

Description

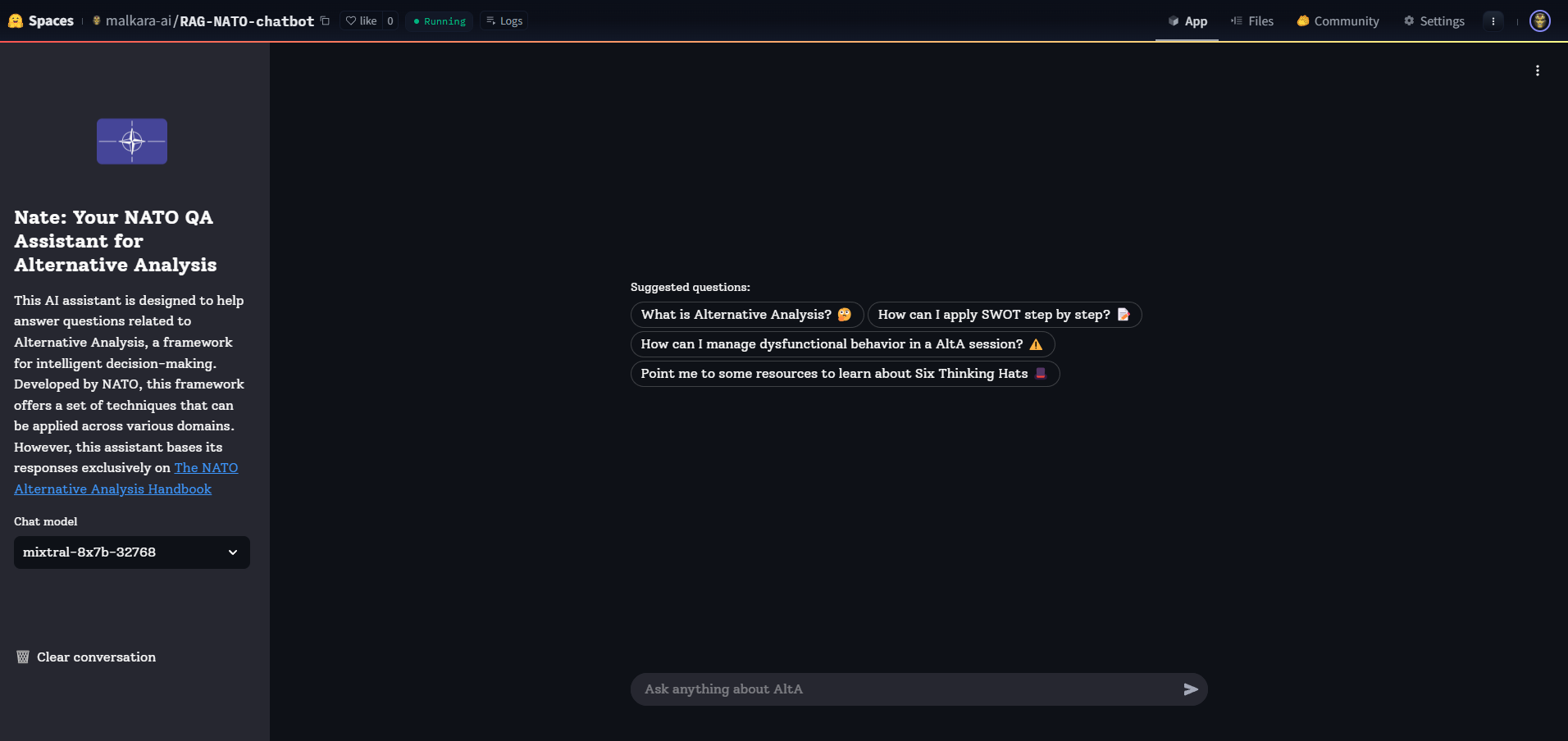

I recently built an AI-powered virtual assistant specializing in NATO’s Alternative Analysis (AltA) methodology. This chatbot leverages Large Language Models (LLMs) combined with Retrieval-Augmented Generation (RAG) to provide accurate responses to questions that general-purpose models might struggle with. The system is built using LangChain, Chroma, and the Groq API, while the user interface is developed with Streamlit. The application is deployed on Hugging Face Spaces as a Docker container. Check it out here.

Why This Project?

- Challenging and Niche – The specificity of this topic made the project uniquely demanding. A standard chatbot using only an LLM would struggle due to the lack of specialized knowledge.

- Background in Psychology – My interest in human decision-making naturally led me to this project.

- Interest in Technology & Military Topics – This project provided an opportunity to explore NATO methodologies while enhancing my technical skills.

Development workflow

1. Extracting and preprocessing text

-

The first step was obtaining and converting the AltA methodology handbook from PDF to plain text.

-

Parsing the PDF was particularly challenging due to its structured content but complex formatting.

-

Initially, I used PyPDF with custom preprocessing functions, but the results were suboptimal—some words were broken due to line breaks, and the hierarchical structure was lost.

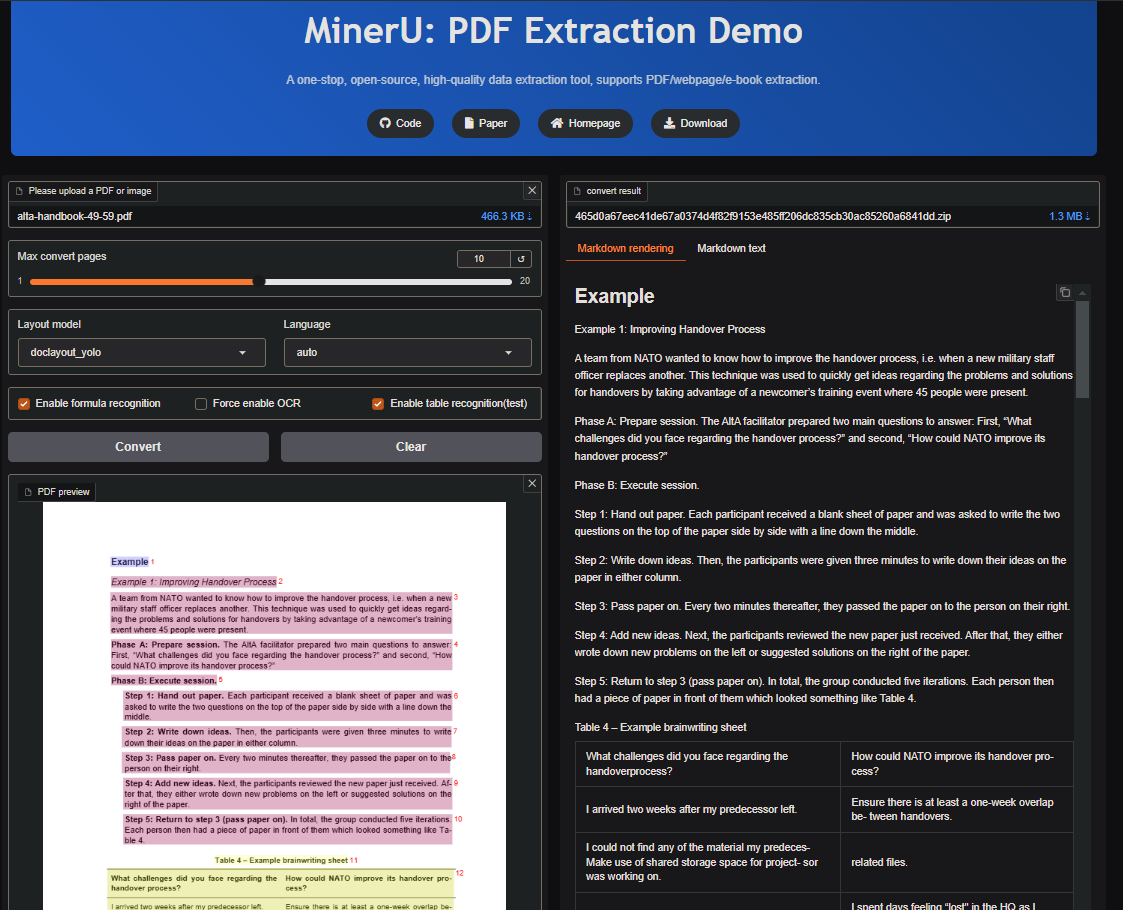

-

Fortunately, I discovered MinerU, a recently developed tool for PDF parsing, which significantly improved extraction quality. This tool preserved the original structure and formatted content into markdown, making the next steps much smoother (chek it out here, it’s pretty good!).
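The kind of custom preprocessing mentioned above can be sketched as follows. This is a hypothetical cleanup function (`clean_extracted_text` is an illustrative name, not the code used in the project) that operates on the plain string a PDF extractor returns, using only Python's standard library.

```python
import re

def clean_extracted_text(raw: str) -> str:
    """Hypothetical cleanup for plain text extracted from a PDF."""
    # Rejoin words hyphenated across a line break: "meth-\nodology" -> "methodology"
    text = re.sub(r"(\w)-\n(\w)", r"\1\2", raw)
    # Turn single line breaks inside a paragraph into spaces,
    # but leave blank lines (paragraph boundaries) untouched
    text = re.sub(r"(?<!\n)\n(?!\n)", " ", text)
    # Squeeze runs of spaces/tabs left over from the page layout
    text = re.sub(r"[ \t]+", " ", text)
    return text.strip()

raw = "Alternative Analysis is a meth-\nodology that helps\nteams challenge assumptions.\n\nRed Teaming"
print(clean_extracted_text(raw))
```

Rules like these fix broken words but cannot recover headings or nesting (and they wrongly merge genuine compounds such as "decision-\nmaking"), which is why a structure-aware parser made such a difference.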

2. Chunking & vectorization

The extracted text needed to be divided into meaningful chunks before being transformed into vector representations for retrieval. Early on, this was a complex process:

```python
# Older LangChain versions: from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain_text_splitters import RecursiveCharacterTextSplitter

# Splitter applied to chapters dedicated to techniques
techniques_splitter = RecursiveCharacterTextSplitter(
    separators=['\n\nWhat to use it for\n', '\n\nApplication\n', '\n\nExample',
                '\n\nChallenges', '\n\nHints and tips', '\n\nFurther reading', '\n\n'],
    keep_separator='start',
    chunk_size=300,
    chunk_overlap=20,
    length_function=len,
    add_start_index=True,
)

# Splitter applied to the glossary chapter
glossary_splitter = RecursiveCharacterTextSplitter(
    separators=['\n\n'],
    keep_separator='end',
    chunk_size=300,
    chunk_overlap=0,
    length_function=len,
    add_start_index=True,
)
```
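To make the role of the custom separators concrete, here is a simplified, standard-library illustration (not LangChain's implementation) of splitting a technique chapter before each section heading while keeping the heading at the start of its chunk, which is the idea behind `keep_separator='start'`. The function name and sample text are hypothetical.

```python
import re

# Section headings used as separators in the techniques chapters (from the splitter above)
SECTION_HEADINGS = ["What to use it for", "Application", "Example",
                    "Challenges", "Hints and tips", "Further reading"]

def split_by_headings(text: str, headings: list[str]) -> list[str]:
    """Split before every heading that starts a new paragraph, keeping the
    heading at the start of its chunk."""
    # Consume the blank line, then only look ahead for one of the headings
    pattern = r"\n\n(?=(?:" + "|".join(re.escape(h) for h in headings) + r"))"
    return [chunk.strip() for chunk in re.split(pattern, text) if chunk.strip()]

sample = ("Technique X\nShort description.\n\n"
          "What to use it for\nWhen to apply it.\n\n"
          "Application\nStep-by-step guidance.\n\n"
          "Challenges\nCommon pitfalls.")
for chunk in split_by_headings(sample, SECTION_HEADINGS):
    print(repr(chunk))
```

The real `RecursiveCharacterTextSplitter` goes further: it falls back through the separator list and splits or merges pieces to respect `chunk_size=300` and `chunk_overlap=20`, which this sketch omits.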

However, with MinerU, the process was dramatically simplified:

```python
from langchain_text_splitters import MarkdownHeaderTextSplitter

# markdown_document: the Markdown text produced by MinerU
headers_to_split_on = [
    ("#", "Topic 1"),
    ("##", "Topic 2"),
    ("###", "Topic 3"),
]

markdown_splitter = MarkdownHeaderTextSplitter(headers_to_split_on, strip_headers=False)

md_header_splits = markdown_splitter.split_text(markdown_document)[6:]  # Discard first 6 chunks (cover, index)
```
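Conceptually, header-based splitting yields one chunk per section, tagged with the titles of its enclosing headers. The sketch below is a simplified, standard-library illustration of that idea (it is not LangChain's code; `split_on_headers` and the sample document are hypothetical), reusing the same header-to-metadata mapping and keeping header lines in the text as `strip_headers=False` does.

```python
# Header levels and metadata keys, mirroring headers_to_split_on above
HEADERS = [("#", "Topic 1"), ("##", "Topic 2"), ("###", "Topic 3")]

def split_on_headers(md: str) -> list[dict]:
    """Simplified header splitter: one chunk per section, header kept in the
    content, enclosing header titles stored as metadata."""
    chunks, lines, meta = [], [], {}

    def flush():
        content = "\n".join(lines).strip()
        if content:
            chunks.append({"content": content, "metadata": dict(meta)})
        lines.clear()

    for line in md.splitlines():
        stripped = line.strip()
        # Header level of this line, if any ("## x" does not match "# ")
        level = next((i for i, (mark, _) in enumerate(HEADERS)
                      if stripped.startswith(mark + " ")), None)
        if level is not None:
            flush()  # a new header closes the previous section
            # Forget titles at this level or deeper, then record the new one
            for _, key in HEADERS[level:]:
                meta.pop(key, None)
            mark, key = HEADERS[level]
            meta[key] = stripped[len(mark) + 1:].strip()
        lines.append(line)  # keep the header line, like strip_headers=False
    flush()
    return chunks

doc = "# Part A\nIntro text.\n## Technique 1\nBody 1.\n### Hints\nTip.\n## Technique 2\nBody 2."
for chunk in split_on_headers(doc):
    print(chunk["metadata"], repr(chunk["content"]))
```

Because each chunk carries its section titles, a query about a single technique tends to hit one self-contained chunk, which fits the improvement described for single-topic queries.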

This improved retrieval quality, especially for single-topic queries. Switching the embedding model from Sentence Transformers (my initial attempts) to Gemini 1.5 improved performance further.

3. Building the RAG system

- The user query is embedded and used to search the database for the k most similar vectors.

- The text chunks behind these vectors are passed to the LLM as context, grounding its answers in the handbook.

- Prompt tuning was crucial for improving results.

- I selected Mixtral-8x7B (via the Groq API) as the core LLM because of its strong performance (other models are also available in the app if you want to play with them). (*Update: a few weeks after I deployed the app, Groq deprecated Mixtral-8x7B, so it no longer appears as an option.)
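The retrieval-then-generate loop above can be sketched with toy vectors. This is a minimal illustration, assuming cosine similarity as the ranking metric and a hypothetical prompt wording; in the actual app Chroma performs the search (and may be configured with a different distance metric), and the tuned prompt is not shown here.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def top_k(query_vec: list[float], store: list[tuple[str, list[float]]], k: int = 2) -> list[str]:
    """store holds (chunk_text, vector) pairs; return the k most similar chunks."""
    ranked = sorted(store, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]

def build_prompt(question: str, chunks: list[str]) -> str:
    """Hypothetical prompt: retrieved chunks become the LLM's context."""
    context = "\n\n".join(chunks)
    return ("Answer the question using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {question}")

# Toy 3-dimensional "embeddings" standing in for real embedding vectors
store = [("chunk about AltA", [1.0, 0.0, 0.0]),
         ("chunk about Red Teaming", [0.0, 1.0, 0.0]),
         ("glossary entry", [0.7, 0.7, 0.0])]
print(build_prompt("What is AltA?", top_k([0.9, 0.1, 0.0], store, k=2)))
```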

One key challenge was chat memory. In a naive RAG implementation, the chatbot does not retain conversational context. For example:

- User: “What is AltA?”

- User: “How can I apply it?”

- The system fails to understand that “it” refers to AltA.

To fix this, I implemented memory-aware retrieval using a rephraser LLM that reformulates each query by incorporating recent conversation history.

```python
rephrase_template = """You are a query rephraser. Given a chat history and the latest user query, rephrase the query if it implicitly references topics in the chat history.
If the query does not reference the chat history, return it as is. Do not provide explanations, just return the rephrased or original query.

Chat history:
{chat_history}

Latest user query:
{input}
"""
```

With this improvement, the chatbot correctly reformulates ambiguous queries before retrieving relevant information.
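The memory-aware flow can be sketched as: format the recent history, fill the rephraser template, and use the model's output as the retrieval query. This is a minimal sketch under stated assumptions: the template is an abbreviated copy of the one above, `format_history`, `contextualize`, and the message-window size are hypothetical, and `fake_llm` is a hard-coded stand-in for the real rephraser LLM call so the example runs offline.

```python
# Abbreviated copy of the rephraser template (same placeholders)
REPHRASE_TEMPLATE = (
    "You are a query rephraser. Given a chat history and the latest user query, "
    "rephrase the query if it implicitly references topics in the chat history.\n"
    "If the query does not reference the chat history, return it as is.\n\n"
    "Chat history:\n{chat_history}\n\nLatest user query:\n{input}\n"
)

def format_history(messages: list[tuple[str, str]], max_messages: int = 4) -> str:
    """Render the most recent (role, text) messages as 'role: text' lines."""
    return "\n".join(f"{role}: {text}" for role, text in messages[-max_messages:])

def contextualize(query: str, messages: list[tuple[str, str]], rephrase) -> str:
    """Return a standalone query for retrieval; `rephrase` is any callable
    that sends a prompt to the rephraser LLM and returns its text."""
    if not messages:
        return query  # first turn: nothing to resolve
    prompt = REPHRASE_TEMPLATE.format(chat_history=format_history(messages), input=query)
    return rephrase(prompt).strip()

def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for the rephraser LLM."""
    return "How can I apply AltA?\n"

history = [("user", "What is AltA?"),
           ("assistant", "AltA is NATO's Alternative Analysis methodology.")]
print(contextualize("How can I apply it?", history, fake_llm))
```

Skipping the rephraser on the first turn saves one LLM call; only the rephrased query is used for retrieval.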

Deployment & UI

I used Streamlit for the UI, which made development straightforward despite some challenges when testing it in Google Colab.

For deployment, I opted for a moderately complex approach:

- I created a Dockerfile and uploaded all the necessary files to Hugging Face Spaces, which automatically built and deployed a Docker container with the packaged application.

- This approach offers more flexibility than Streamlit Cloud, making it reusable for future projects.

Final thoughts

This project was an exciting dive into RAG-based chatbots, PDF parsing, and deployment strategies. It also reinforced the importance of structuring and preprocessing text efficiently for high-quality retrieval. Future improvements could include fine-tuning models further and experimenting with alternative memory mechanisms.

🚀 Try the chatbot here!

The source code, data and notebooks used for this project can be found here.