Simulation of QUARTO! board game and minimax algorithm

Final project of the “Simulation techniques” subject in the Master in Methodology for behavioral sciences. For this assignment we were given a short description of the QUARTO! board game rules (slightly modified) and a clear objective: to simulate it in R and to design a game strategy. The performance of the game strategy had to be better than a strategy based on random selection of moves.

Game description

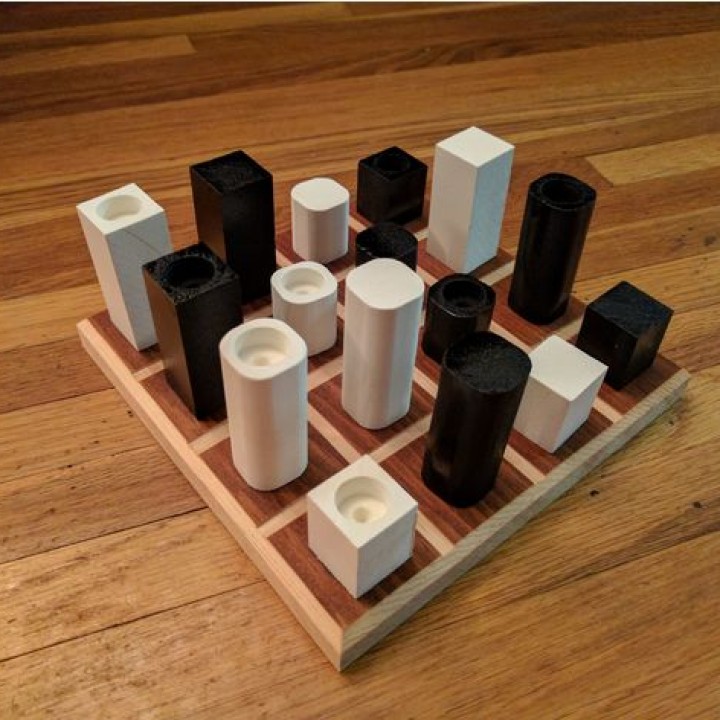

The rules of this strategy game are easy: “The wooden pieces have four characteristics: light/dark, tall/short, square/round, or hollow/filled. Each turn, an opponent chooses which piece you play; if you form a line of four pieces with one characteristic in common, you win. If you can’t, you choose a piece for your opponent to play. The game progresses until a winner is decided or all pieces have been played.” (Source:www.hammacher.com)

However, in our case the rules were slightly modified. The player doesn’t choose the opponent’s pieces, she chooses her own pieces. That change makes the game more similar to tic-tac-toe or connect 4.

QUARTO! in code

How can the game be represented in R?

- Pieces: There are $2^4=16$ different pieces that result from the combination of different properties (2 different properties for each of the 4 attributes). Because the specific property or attribute the piece has doesn’t make any difference to the game, I decided to represent the set of all pieces as a 16 x 4 numeric matrix.

# expand.grid does the same but it's a bit slower

create.pieces <- function(){

return(cbind(rep(1:2,each=8,times=1),

rep(3:4,each=4,times=2),

rep(5:6,each=2,times=4),

rep(7:8,each=1,times=8)))

}

create.pieces()

[,1] [,2] [,3] [,4]

[1,] 1 3 5 7

[2,] 1 3 5 8

[3,] 1 3 6 7

[4,] 1 3 6 8

[5,] 1 4 5 7

[6,] 1 4 5 8

[7,] 1 4 6 7

[8,] 1 4 6 8

[9,] 2 3 5 7

[10,] 2 3 5 8

[11,] 2 3 6 7

[12,] 2 3 6 8

[13,] 2 4 5 7

[14,] 2 4 5 8

[15,] 2 4 6 7

[16,] 2 4 6 8

- Board: The board must be able to store information about position, but also about the attributes of every piece, because they are all different. For that reason I created a 3D board, an array of dimensions 4 x 4 x 4:

board <- array(0, dim = c(4,4,4))

, , 1

[,1] [,2] [,3] [,4]

[1,] 0 0 0 0

[2,] 0 0 0 0

[3,] 0 0 0 0

[4,] 0 0 0 0

, , 2

[,1] [,2] [,3] [,4]

[1,] 0 0 0 0

[2,] 0 0 0 0

[3,] 0 0 0 0

[4,] 0 0 0 0

, , 3

[,1] [,2] [,3] [,4]

[1,] 0 0 0 0

[2,] 0 0 0 0

[3,] 0 0 0 0

[4,] 0 0 0 0

, , 4

[,1] [,2] [,3] [,4]

[1,] 0 0 0 0

[2,] 0 0 0 0

[3,] 0 0 0 0

[4,] 0 0 0 0

Moving a piece to a certain position in the board is now as easy as doing this:

fourth_piece <- create.pieces()[4,] # [1 3 6 8]

board[2,2,] <- fourth_piece

, , 1

[,1] [,2] [,3] [,4]

[1,] 0 0 0 0

[2,] 0 1 0 0

[3,] 0 0 0 0

[4,] 0 0 0 0

, , 2

[,1] [,2] [,3] [,4]

[1,] 0 0 0 0

[2,] 0 3 0 0

[3,] 0 0 0 0

[4,] 0 0 0 0

, , 3

[,1] [,2] [,3] [,4]

[1,] 0 0 0 0

[2,] 0 6 0 0

[3,] 0 0 0 0

[4,] 0 0 0 0

, , 4

[,1] [,2] [,3] [,4]

[1,] 0 0 0 0

[2,] 0 8 0 0

[3,] 0 0 0 0

[4,] 0 0 0 0

To avoid mistakes and using the same piece or position twice, we need to keep track of the available pieces and positions. We need an object for both. We saw the pieces can be stored in a matrix, and that’s also possible with the available moves.

pieces <- create.pieces()

( positions <- cbind(1:4,rep(1:4,each=4)) )

[,1] [,2]

[1,] 1 1

[2,] 2 1

[3,] 3 1

[4,] 4 1

[5,] 2 2

[6,] 3 2

[7,] 4 2

[8,] 1 3

[9,] 2 3

[10,] 3 3

[11,] 4 3

[12,] 1 4

[13,] 2 4

[14,] 3 4

[15,] 4 4

A complete move by one player could be like this:

board <- array(0,c(4,4,4))

positions <- cbind(1:4,rep(1:4,each=4))

pieces <- create.pieces()

# choose piece and position

pos <- positions[5,] # 1 x 2 vector

piece <- pieces[4,] # 1 x 4 vector

# move piece to position

board[pos[1],pos[2],] <- piece

# update remaining pieces and positions

positions <- positions[-5,] # 15 x 2 matrix

pieces <- pieces[-4,] # 15 x 2 matrix

Now we are capable a simulating a complete game (link to code at the end), but the way players choose their pieces and moves is totally random at this moment.

Game strategy: Minimax and alpha-beta

In order to create an artificial agent that plays rationally I decided to implement the minimax algorithm with alpha-beta pruning. Technically they are different algorithms, but I consider the latter as an improvement or extension of the former.

And how do they work? Good explanations can be found in Russell & Norvig (2020) and Wikipedia:

- Minimax: “Given a game tree, the optimal strategy can be determined from the minimax value of each state in the tree (…). The minimax value is the utility (for player A, for example) of being in the corresponding state, assuming that both players play optimally from there to the end of the game.” The utility given by the payoff function “indicates how good it would be for a player to reach that position”.Obviously, “The minimax value of a terminal state is just its utility” (score associated with win, loss, draw or other complex states). Minimax works by choosing the next move based on these utilities.

So, for instance, “If player A can win in one move, his best move is that winning move. If player B knows that one move will lead to the situation where player A can win in one move, while another move will lead to the situation where player A can, at best, draw, then player B’s best move is the one leading to a draw. Late in the game, it’s easy to see what the “best” move is. The Minimax algorithm helps find the best move, by working backwards from the end of the game. At each step it assumes that player A is trying to maximize the chances of A winning, while on the next turn player B is trying to minimize the chances of A winning (i.e., to maximize B’s own chances of winning).”

- Alpha-beta pruning: Minimax is a recursive algorithm that works by searching or exploring the states of the game tree. That becomes a problem even with moderately complex games because “the number of game states is exponential in the depth of the tree”. That means that “raw” or naïve minimax is often impractical. However, we can use the technique of alpha-beta pruning to prune (leave unexplored) “large parts of the tree that make no difference to the outcome”. It’s basically the same as minimax but “It stops evaluating a move when at least one possibility has been found that proves the move to be worse than a previously examined move. Such moves need not be evaluated further.”

I’ve fundamentally based the implementation of the algorithm on the pseudocode provided by Russell & Norvig (2020). However, it’s not exactly the same. I reproduce here the pseudocode that best represents the program I designed, and again, the link to the actual code can be found at the end of the post.

FUNCTION AB_MINIMAX(board,ply,player,positions,pieces,depth=0,alpha=-infinity,beta=infinity)

best = list(value,pos,piece)

terminal_state = is_terminal(board,ply+depth)

IF terminal_state OR depth == 4 THEN

best.value = payoff(terminal_state,player,depth)

RETURN best

ELSE

IF player == 1 THEN

v = -infinity

FOR each position in positions

FOR each piece in pieces

updated = result(board,positions,position,pieces,piece)

output = AB_MINIMAX(updated.board,ply,-player,

updated.positions,updated.pieces,

depth+1,alpha,beta)

IF output.value > v THEN

v = output.value

best.value = output.value

best.pos = position

best.piece = piece

alpha = max(alpha,v)

ENDIF

IF v >= beta THEN

RETURN best

ENDIF

ENDFOR

ENDFOR

ELSE

v = infinity

FOR each position in positions

FOR each piece in pieces

updated = result(board,positions,position,pieces,piece)

output = AB_MINIMAX(updated.board,ply,-player,

updated.positions,updated.pieces,

depth+1,alpha,beta)

IF output.value < v THEN

v = output.value

best.value = output.value

best.pos = position

best.piece = piece

alpha = max(alpha,v)

ENDIF

IF v <= alpha THEN

RETURN best

ENDIF

ENDFOR

ENDFOR

ENDIF

ENDIF

ENDFUNCTION

The issue of efficiency

I originally tried to make everything in R, but I quickly noticed that the program was terribly slow. To reduce the running time I decided to take advantage of the package rcpp and I re-wrote almost everything in C++ code. The improvement was remarkable. Here you can see the time needed to make a move after 12 “plies” or turns:

microbenchmark::microbenchmark(

'R'={AB_MINIMAX_R(demo$board,ply=13,player=1,demo$positions_left,demo$pieces_left)},

'C++'={AB_MINIMAX(demo$board,ply=13,player=1,demo$positions_left,demo$pieces_left)}

)

Unit: microseconds

expr min lq mean median uq max neval cld

R 13242.1 14604.3 20137.87 16809.8 22100.9 57384.4 100 b

C++ 259.1 296.1 375.22 322.5 407.2 1144.8 100 a

Link to code.

Link to the report.

Comments

- Human vs. “machine”: Eventhough I didn’t mention it, the game has been designed so that it can be played by a real human. I’ve made a second version of the main program with an user interface that has been modified to be more “attractive” and less confusing (converting the 3D board to a bidimensional matrix, for example).

References

Russell, S. & Norvig, P. (2020). Adversarial Search and Games. In S. Russell y P. Norvig, Artificial Intelligence: a modern approach (4a ed) pp.(146-180). Pearson.