Recognizing facial expressions with eigenfaces

Building a simple facial expression recognition system based on the Singular Value Decomposition.

Description

Convolutional neural networks are the state of the art in virtually all tasks related to object recognition. However, it’s worth revisiting some of the older techniques used in this area.

In 1991, Turk & Pentland proposed the “eigenface” approach, which is based on the Singular Value Decomposition (SVD), one of the fundamental matrix factorization techniques in linear algebra and also one of the most widely used.

In this project I’ve tried to implement that strategy, with one additional goal: recognizing facial expressions, not just faces as a whole. The dataset I’m using for this purpose was created by Ebner et al. (2010).

I’ve covered the details in this report, but the main ideas are the following:

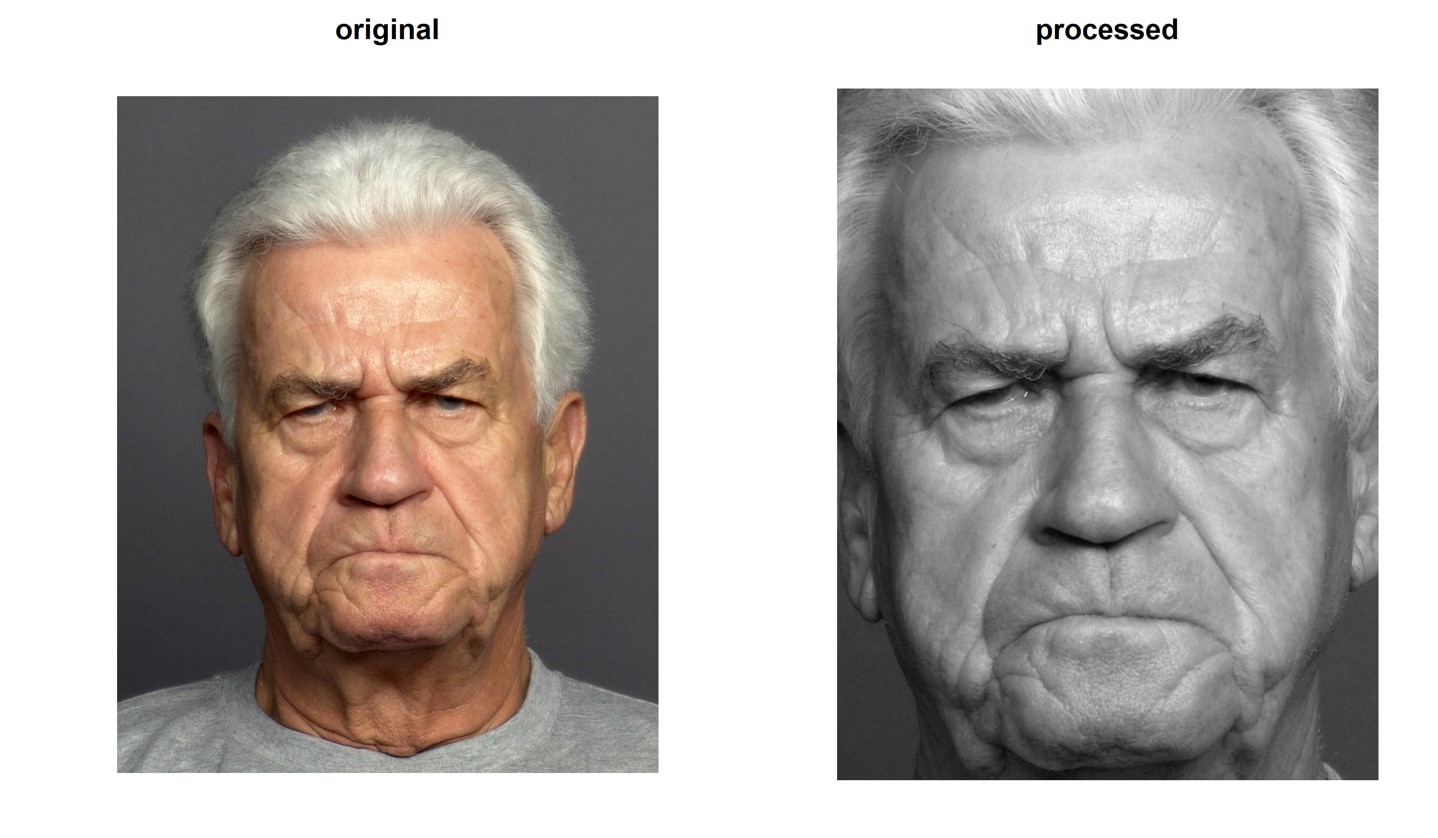

- Edit the photos. Here that means converting them to grayscale (so that when we turn them into matrices we have only two dimensions), reducing the resolution (smaller images, lower computational cost) and cropping them (to remove uninteresting parts, like the background or the hair).

- Reshape each image into a $p \times 1$ vector ($p$ = width · height). We can arrange the $n$ pictures as columns to form the matrix ${\bf X}_{p\times n}$.

- Split the data into training and test sets. In this dataset there are two photos of each person for every facial expression, so the split is easy: one photo of each pair goes into each set, and we get two equivalent sets.

-

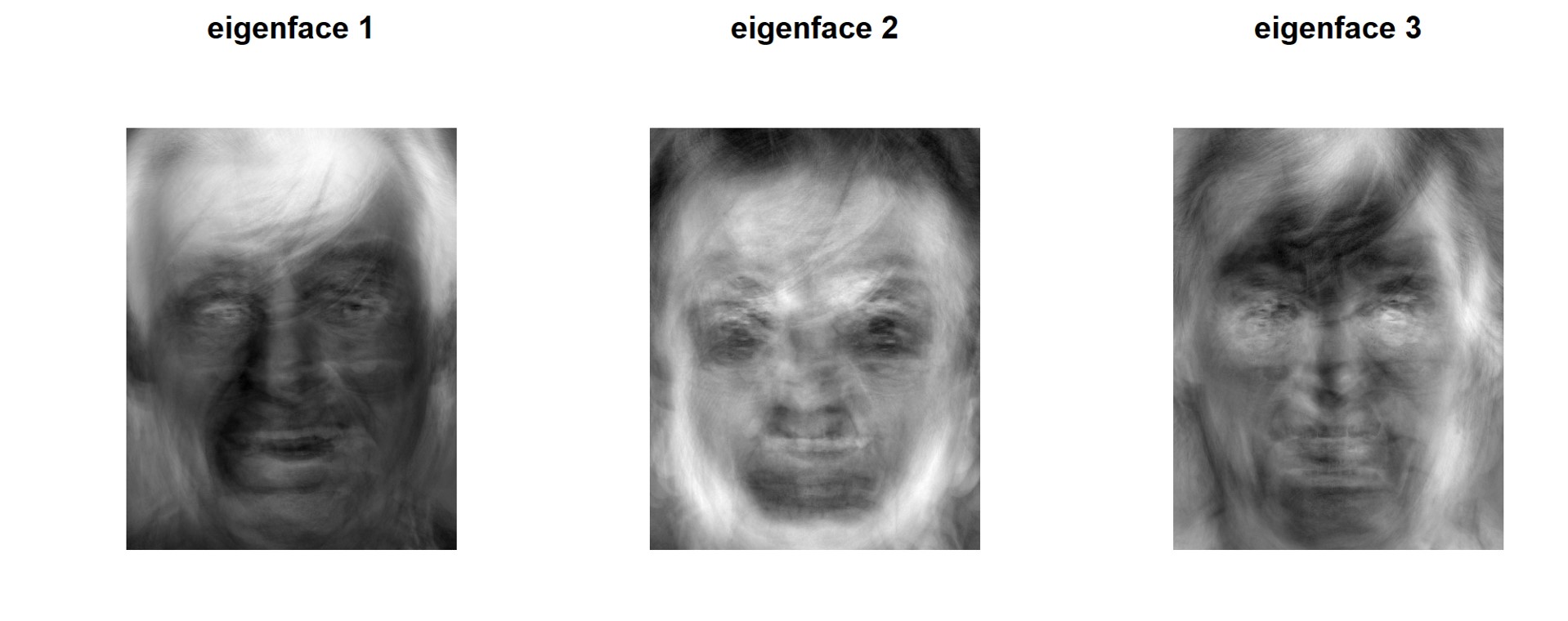

Perform SVD (details in the report) on the training data. In essence, it will decompose the original matrix into 3 matrices. In this case, the first one contains the eigenvectors (eigenfaces). “These eigenvectors can be thought of as a set of features that together characterize the variation between face images.” (Turk & Pentland, 1991). “Some principal components may capture the most common features shared among all human faces, while other principal components will be more useful for distinguishing between individuals. Additional principal components may capture differences in lighting angles.” (Brunton & Kutz, 2020). The choice of the number of principal components/eigenfaces to keep depends on the analyst. I chose to keep 10 (out of 36)

Here are some examples of the generated eigenfaces (reshaped and converted back to images).

I know, it’s a bit creepy.
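The preprocessing, reshaping and SVD steps can be sketched in NumPy as follows. This is only a minimal sketch, not the code from this project: the random arrays stand in for real photos, the image size, crop box and downsampling factor are made up, the helper names (`preprocess`, `build_data_matrix`, `fit_eigenfaces`) are hypothetical, and subtracting the mean face before the SVD is an assumption borrowed from the usual eigenface formulation.

```python
import numpy as np

def preprocess(rgb, crop, factor):
    """Grayscale, crop and downsample one H x W x 3 image (illustrative choices)."""
    gray = rgb @ np.array([0.299, 0.587, 0.114])   # luma-weighted grayscale, H x W
    top, bottom, left, right = crop
    gray = gray[top:bottom, left:right]            # drop background / hair
    h, w = gray.shape
    h, w = h - h % factor, w - w % factor          # trim so the blocks divide evenly
    blocks = gray[:h, :w].reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))                # block-average downsampling

def build_data_matrix(images):
    """Flatten equally sized 2-D images and stack them as columns (p x n)."""
    return np.stack([img.reshape(-1) for img in images], axis=1).astype(float)

def fit_eigenfaces(X_train, k):
    """Return the mean face, the first k eigenfaces and all singular values."""
    mean_face = X_train.mean(axis=1, keepdims=True)
    # Economy-size SVD: the columns of U are the eigenfaces.
    U, s, Vt = np.linalg.svd(X_train - mean_face, full_matrices=False)
    return mean_face, U[:, :k], s

# Synthetic stand-ins for 36 training photos (80 x 64 RGB, values in [0, 1)).
rng = np.random.default_rng(0)
raw_photos = [rng.random((80, 64, 3)) for _ in range(36)]
images = [preprocess(photo, crop=(8, 72, 8, 56), factor=2) for photo in raw_photos]

X = build_data_matrix(images)                      # p x n with p = 32 * 24
mean_face, eigenfaces, s = fit_eigenfaces(X, k=10)

# Any eigenface can be reshaped back into an image for display.
first_eigenface_image = eigenfaces[:, 0].reshape(images[0].shape)
```

With real photos, `first_eigenface_image` is the kind of array that would be plotted to obtain pictures like the ones above.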

- Create a classifier. To do that, we project all the training images onto the new low-dimensional space of faces, which basically means obtaining their representation in that space. We do the same with a new image, and then compare its representation with each of the projected training images using the Euclidean distance. The decision rule is to match the new image with the most similar image from the training set.
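The classifier step can be sketched as a nearest-neighbour search in the eigenface space. Again, this is an illustration under assumptions rather than the project's code: the data are random arrays, the "new" images are just noisy copies of the training images, the mean face is subtracted before projecting, and `project` and `match_to_training` are hypothetical helper names.

```python
import numpy as np

def project(X, mean_face, eigenfaces):
    """Coordinates of each column of X in the eigenface basis (k x n)."""
    return eigenfaces.T @ (X - mean_face)

def match_to_training(train_coords, new_coords):
    """For every new image, index of the closest training image (Euclidean)."""
    diffs = train_coords[:, :, None] - new_coords[:, None, :]  # k x n_train x n_new
    distances = np.linalg.norm(diffs, axis=0)                  # n_train x n_new
    return distances.argmin(axis=0)

# Synthetic data: 36 training images with p = 768 pixels each.
rng = np.random.default_rng(0)
p, n_train, k = 768, 36, 10
X_train = rng.random((p, n_train))
# "New" images: lightly perturbed copies of the training images.
X_new = X_train + 0.01 * rng.standard_normal((p, n_train))

mean_face = X_train.mean(axis=1, keepdims=True)
U, s, Vt = np.linalg.svd(X_train - mean_face, full_matrices=False)
eigenfaces = U[:, :k]

train_coords = project(X_train, mean_face, eigenfaces)  # 10 x 36
new_coords = project(X_new, mean_face, eigenfaces)      # 10 x 36
matches = match_to_training(train_coords, new_coords)   # one index per new image
```

Each entry of `matches` points at a training image; the predicted person and expression are simply the labels of that image. In this toy setup every noisy copy should be matched back to the image it was derived from.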

The results were an accuracy of 1 when the task was to match persons (ignoring everything else) and an accuracy of 0.61 when the task was to match the facial expression. The results are not that bad, considering the small sample size and that the expected accuracy for the facial expression task when guessing at random is 1/6 (approx. 0.17).
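To make the two accuracy figures concrete, here is a small sketch of how they could be computed from the matches. The labels and matches below are a made-up toy example (not the real data or results), and `match_accuracy` is a hypothetical helper.

```python
import numpy as np

def match_accuracy(train_labels, test_labels, matched_idx):
    """Share of test images whose matched training image has the same label."""
    predicted = np.asarray(train_labels)[matched_idx]
    return float(np.mean(predicted == np.asarray(test_labels)))

# Toy labels: two persons, two expressions each, same layout in both sets.
train_person = np.array([0, 0, 1, 1])
train_expr = np.array(["happy", "sad", "happy", "sad"])
test_person = np.array([0, 0, 1, 1])
test_expr = np.array(["happy", "sad", "happy", "sad"])

# Index of the matched training image for each test image. The second test
# image is matched to the right person but the wrong expression.
matched_idx = np.array([0, 0, 2, 3])

person_acc = match_accuracy(train_person, test_person, matched_idx)  # 1.0
expr_acc = match_accuracy(train_expr, test_expr, matched_idx)        # 0.75

# Baseline for six equally likely expressions when guessing at random.
chance_expr = 1 / 6
```

The toy example shows why the two numbers can differ: a match can land on the right person and still carry the wrong expression label.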

In the report I add some extra information if you want to know more. The code is available here.

References

Brunton, S. L., & Kutz, J. N. (2020). Singular Value Decomposition (SVD). In S.L. Brunton & J.N. Kutz, Data-driven science and engineering: Machine learning, dynamical systems, and control, (pp. 1-47). Cambridge University Press.

Ebner, N., Riediger, M., & Lindenberger, U. (2010). FACES—A database of facial expressions in young, middle-aged, and older women and men: Development and validation. Behavior Research Methods, 42, 351-362. doi:10.3758/BRM.42.1.351.

Turk, M., & Pentland, A. (1991). Eigenfaces for recognition. Journal of Cognitive Neuroscience, 3(1), 71-86.